NVIDIA GTC 2025: NVIDIA’s Roadmap for AI in Data Centers, Desktops, and Robots

The founding myth* of Levi Strauss & Co. is that it was the most enduring business to come out of the gold rush of 1849 by supplying rugged work clothes to gold prospectors and miners. Similarly, during the AI infrastructure gold rush of the 2020s, NVIDIA has emerged as the premier business by supplying GPUs to AI hyperscalers and large enterprises. At NVIDIA’s annual GPU Technology Conference (GTC), the company’s place atop the AI supply chain seemed secure in the near-to-medium term: it showed off details of its Blackwell and Rubin roadmap for the next two years and (literally) sketched out its plans for Feynman beyond that.

It almost goes without saying that NVIDIA is powering most of the AI services being launched outside China (and probably some inside China, too). New design wins announced at GTC included GM for self-driving cars and a wireless industry group led by T-Mobile, MITRE, and Cisco that will begin R&D on AI-native wireless network hardware, software, and architecture for 6G.

NVIDIA’s Silicon Roadmap and Its Durable Networking and Software Advantage

NVIDIA CEO Jensen Huang was quite clear on why NVIDIA needs to detail its roadmap in advance: data center purchases are so expensive that they are planned years ahead of implementation and require CapEx allocation, site planning, and power sourcing.

NVIDIA sees its GPUs as essentially “token factories” that generate revenue (somehow) from AI queries. The primary constraint for these factories is the amount of power you can get into the building and how effectively you can use that power across the GPUs and the networking gear that serves them. One way to get more out of that power is to pack more transistors onto each GPU and more GPUs onto each rack. To that end, NVIDIA noted that its current Blackwell architecture offers 40x the inference performance of the prior Hopper architecture, and Jensen Huang joked that nobody should buy Hopper. Going forward, NVIDIA will offer the even more ridiculously powerful** Vera Rubin in 2026 and Rubin Ultra in 2027.

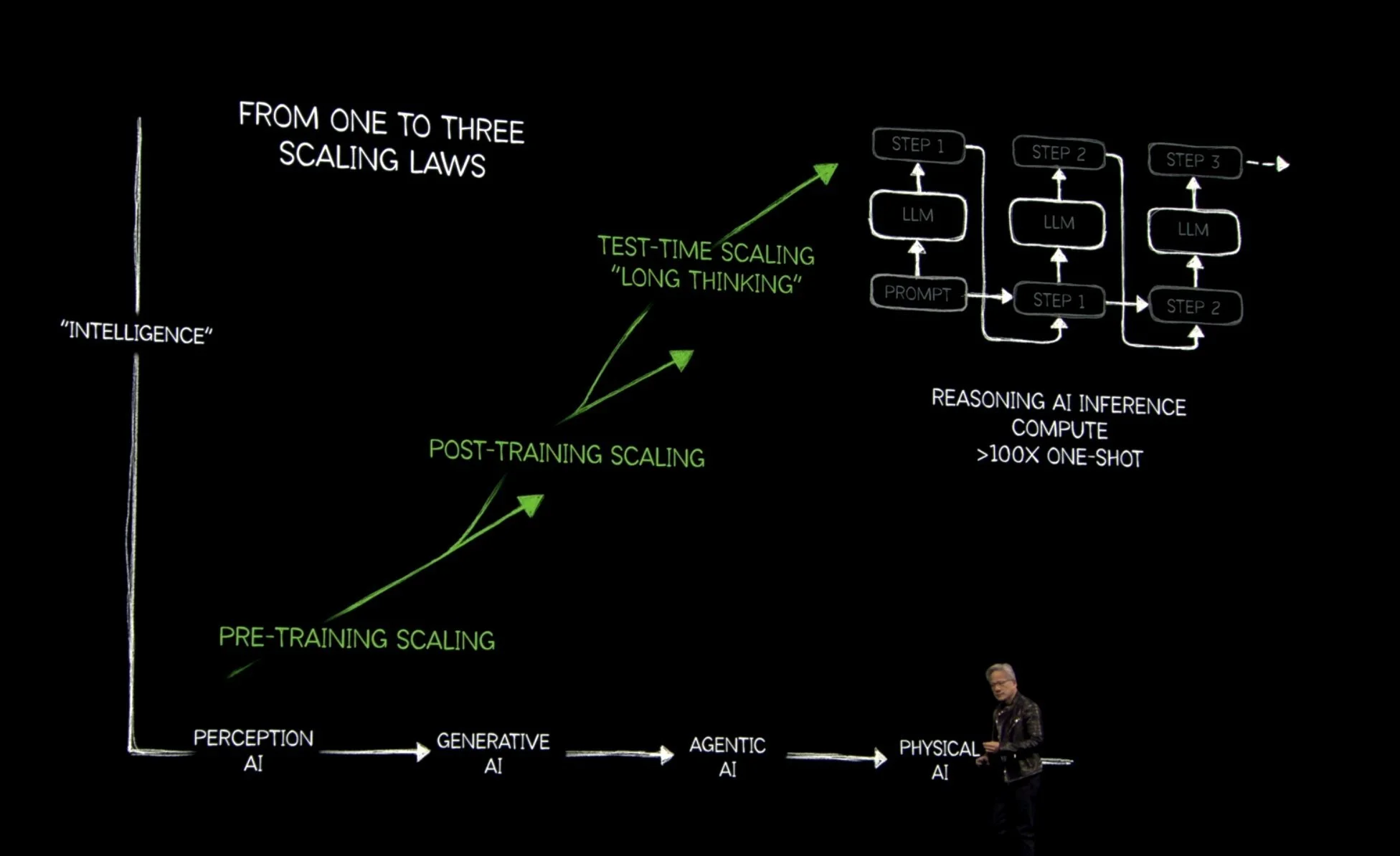

NVIDIA’s AI GPUs are unquestionably expensive, but they lead the market in performance. NVIDIA made a compelling case that even as some AI models have become more efficient, we are increasingly using reasoning models that produce better and more verifiable results, and these require even more compute. Although hyperscalers developing their own chips, AMD, (potentially) Intel, and others certainly provide competition, NVIDIA remains indispensable. That is because NVIDIA not only continues to push performance and efficiency on its GPUs but also has two other advantages: networking and software. NVIDIA highlighted both in the keynote.

The only way to take full advantage of all that silicon is to move data among the chips in sync without dramatically increasing the power spent on networking alone. At GTC 2025, NVIDIA announced new internal NVLink switches and Spectrum-X Photonics networking gear, designed with partners, that reduces the power spent shuttling data around. There was also discussion of new types of data storage optimized for generative AI workloads rather than traditional data center retrieval.

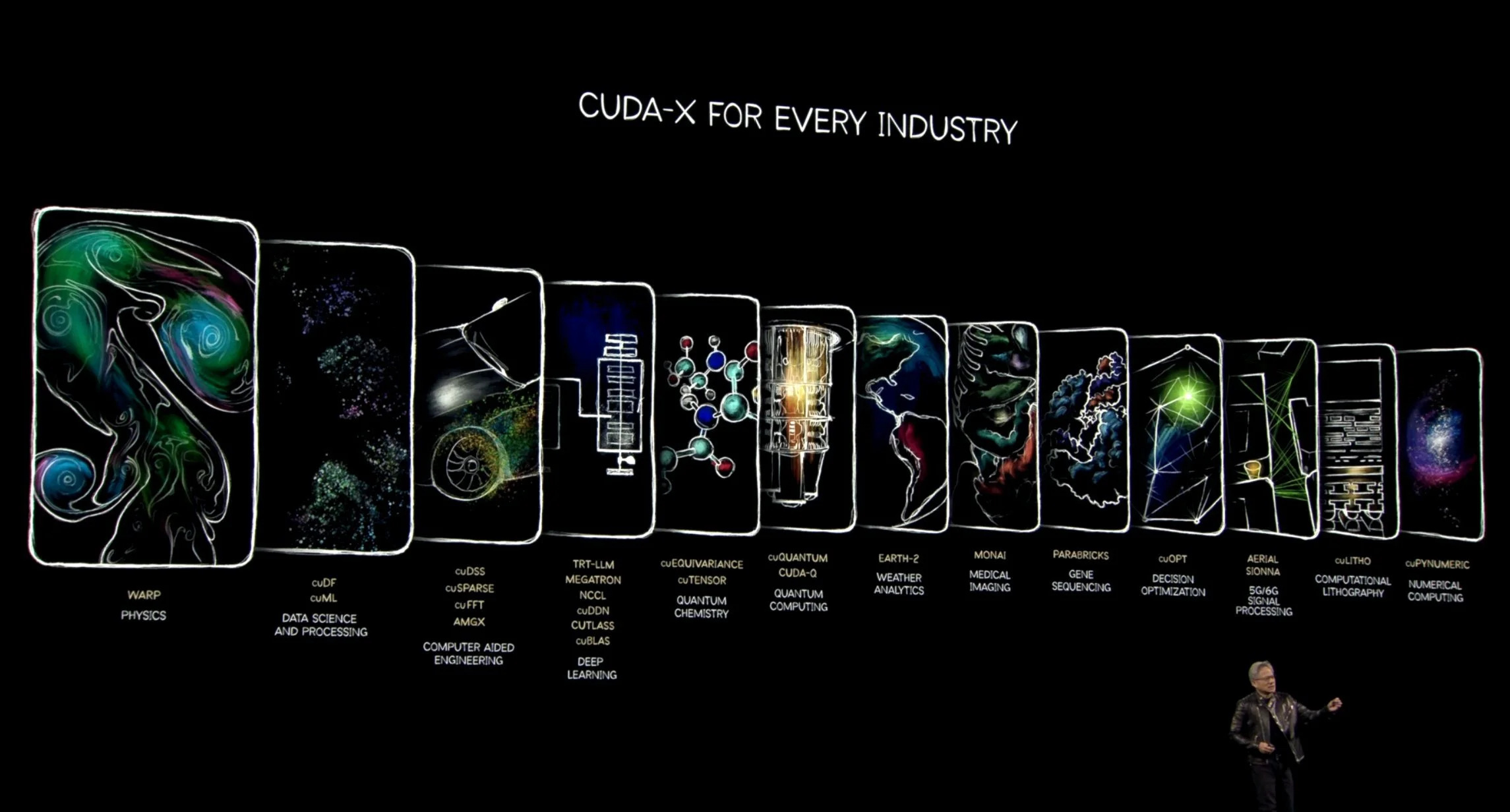

Underpinning it all is steady investment in CUDA-X software libraries for both horizontal and vertical market segments, covering everything from physics and logic to weather prediction. There are even CUDA-X libraries for computational lithography in chip manufacturing, so NVIDIA can run AI on its current generation of silicon to help design the next one.

NVIDIA on the Desktop: Competing with Apple, Intel, AMD, and Qualcomm

NVIDIA’s need to maintain its software advantage explains the line of enterprise desktop hardware now called DGX: if you get developers and researchers to use your software architecture locally, the code they write for the cloud will be optimized for NVIDIA’s CUDA-X architecture there as well.

At CES, NVIDIA announced Project Digits, a tiny $3,000 desktop computer aimed at AI researchers who want to work with medium-sized AI models locally. Project Digits has now been renamed DGX Spark and will ship mid-year. It is being joined by the larger DGX Station, and both will be available from partners such as HP, Lenovo, Dell, and Asus. DGX Spark has a GB10 Superchip, while DGX Station gets a GB300 and up to 784 GB of unified system memory, so it should be able to handle large LLMs.

DGX is not aimed at general-purpose computing, at least not yet (NVIDIA tried and failed to target mobile platforms with Tegra in the past, and the scars are still there). However, it does compete with other PC and workstation silicon vendors. Apple is clearly aiming at AI workloads with the Mac Studio M3 Ultra. The Mac may be the better choice for varied workloads, while CUDA-oriented AI work should translate better to NVIDIA’s platform. Intel and AMD also build workstation chips, but they are constrained by separate memory architectures for the CPU and GPU, whereas AI models are easier to manage with the unified memory found in Apple’s M series and NVIDIA’s Blackwell.

Robots Are Becoming Real Faster Than You Might Think

NVIDIA also spent time talking about its CUDA-X libraries for robotics and how developers can use AI-based virtual worlds and advanced physics models to train robots quickly for real-world applications. The primary rationale given for the impending market for AI-driven robots is alleviating labor shortages, but NVIDIA’s broader vision for robotics is to automate anything that moves: cars, manufacturing, drones, and home health aides.

Despite the serious aspirations for AI-enhanced robotics, Jensen was handily upstaged by the entertainment robot built by Disney Research, which toddled onstage looking as if it had walked out of a classic Star Wars movie, complete with sound effects by Ben Burtt. If some of the robotics videos featuring weirdly jointed robot workers looked scarily dystopian, the folks at Disney are building future bots that are straight-up adorable.

For Techsponential clients, a report is a springboard to personalized discussions and strategic advice. To discuss the implications of this report on your business, product, or investment strategies, contact Techsponential at avi@techsponential.com.

*It’s mostly a myth. Levi Strauss & Co. wasn’t founded until the 1850s, as a dry goods business; one of Levi Strauss’s customers joined with him to patent riveted pants in 1873 to sell to Nevada laborers, not gold rush prospectors. But it’s a good story.

** A Vera Rubin system will have 1,300 trillion transistors across 12,672 CPU cores, 576 Rubin GPUs, 2,304 memory chips storing 150 TB, and 144 NVLink switches that transfer 1,500 PB/s. My human brain has difficulty processing the scale of these numbers.